Intelligence collection, analysis, dissemination

Marcelo Hoffmann

- unedited transcript -

What I will say today is based on our experience working on this for a few more years than Doug remembers. I have been at this for about twelve years, but time goes fast. What I will take is the example of the Business Intelligence Center activities. This is a group that has been doing multi-client research and some consulting for some forty-plus years. I'll use this as an example of what Doug calls a DKR, a dynamic knowledge repository, and some of the issues that come about from this.

What we have here is seven multi-client programs. You can check them out on the web under future.sri.com. Think of them as seven syndicated research activities. The whole point is to organize these types of activities around customers in ways that they find particularly useful. We have sixty-five or seventy people working on these types of activities. That is also important because we are distributed, our clients are distributed, and that affects the way that we do our business and how we connect with our clients. So we have people in Menlo Park, Princeton, Tokyo, and London, around these different activities: intelligent transportation systems, psychographics, consumer financial decisions, learning on demand, media futures, the business intelligence program, and Explorer. I'll limit most of my comments to the Explorer program, which is about technology monitoring. It is perhaps the closest to what Doug looks at in terms of intelligence collection, dissemination, that sort of thing. We have found that there are some classic design problems with this.

Given a limited set of resources, you have the dilemma: do you go deeper, or do you go wider in breadth in terms of what you cover for the limited resources, whether funding, people, or whatever resources you are dealing with? Then, how do you do this? What do you collect, how, for whom? I will get more specific.

These are not rhetorical questions; they are very specific and rather problematic. How do you analyze what you get? For whom? Who the people are is extremely important. Then how do you disseminate? This is also a major issue, and I will use one example, again under the Explorer program, as a case. In general, the answer to these kinds of issues is that we do what is actionable for our clients, and in ways that make sense to them. That is highly variable, but we have to do it because there is no other measure.

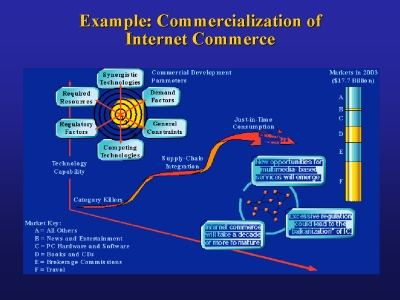

This is a classic example of one of the graphics that we use in one of the multi-client programs. It's how we disseminate the information, in this case on the commercialization of Internet commerce itself. We tend to use lots of graphics, which unfortunately don't show up here after many conversions of Mac to Windows to Mac, and so on. If you were to see it, you would see that the graphics define something about synergistic technologies. There is a graphic in the upper left that shows how far along different parameters are when it comes to commercial development: things like whether the resources are higher or lower than average, the factors required for the technology, and so on. We try to summarize things as much as we can in graphical form. This is important because we have clients all over the world, and they tend not to have too much time to spend analyzing lots of text. This is something that I would bring back if we were thinking about a dynamic knowledge repository in the sense of what Doug says. We have to start thinking about what kind of dissemination we would want, and how we would want to present things, represent things, and analyze them. We emphasize summarization for executives, visualization, and material put together for non-English speakers.

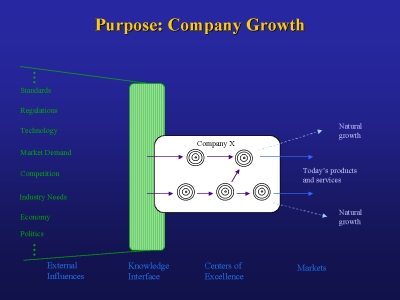

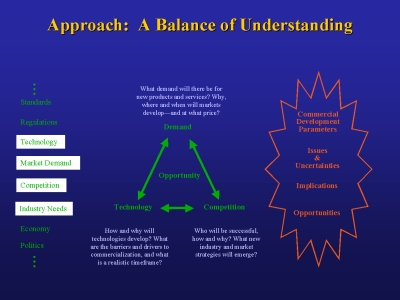

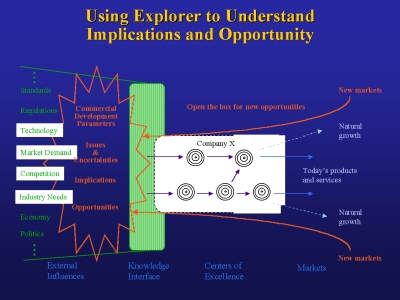

The purpose. Typically the clients are concerned about growth, about doing business, and this is generic. If you could see this better, you would see on the left-hand side all kinds of inputs to a company. Company X is the white box there, and on the right is where the natural growth would be for the particular company. If nothing is done, if they don't get additional inputs, they would still have some type of growth or lack thereof, and then the question is what do we provide. I think that this would be pretty much generic for any outside provision of knowledge and understanding to expand their capabilities. We sell what amounts to a balance of understanding between demand, technology, and competition.

Then the question is how do you analyze this combination in ways that make sense? On the right-hand side you would see something about commercial development parameters. Basically, these are the parameters that the company and clients want to see to help them make better decisions. When you superimpose that on top of the existing functioning of the company, the client, then hopefully what you would have is a greater expansion of opportunities on the right-hand side.

That is very important to consider in terms of phase matching: whatever inputs a company or organization takes in have to match the decision-making styles they have, in ways that let them better absorb them, use them, and so on. These are not rhetorical questions; I will expand on this even a little bit more.

The lesson that we have found is quite broad. The collection, analysis, and dissemination are extremely contextual. If I were to take a case of, let's say, packaging or bottling, it's not good enough to know that companies like Coca-Cola and Pepsi-Cola are both interested in bottles and glass. It is more important to know who is in charge of finding this out, and what exactly they need to know. Because if not, you could find out something that they already know, or that they don't care about, or that is too futuristic or too far back for their time horizon. So timing, frameworks, and ways to present information are very important.

We have found that we have to continuously evolve both the content of what we are doing and how we present it, and in what styles. As far as that goes, we have found that whereas we used to publish lots of text, nowadays we have got more graphics, much more use of the Internet, the web, presentations, and so on. People have less time to digest text, so they want us to do things faster, in sound bites just about. So we do more presentations, and we do many more interactions. So I think that should be something that is considered if one were thinking of intelligence collection with regard to bootstrapping and the DKR. What kinds of multimedia would be appropriate for whom, and under what context?

One of the ongoing challenges we have found is how you define a multi-client universe. Who all needs to get what kind of information? If you make it too broad, then nobody is interested. If you make it too deep, then very few people are interested. There is literally an art to defining the common base that you are going to study collectively. Just for example, in our multi-client designs it might take us six months, sometimes a year, to actually define this and go back to clients and find out what is needed, what isn't, how, and so on. It is a really difficult exercise. Oftentimes, what you find is not what you'd expected.

We had a case some time back, around 1995, when we decided that the environment was really a big deal. What we found was that the definition of the environment was very different for everybody that we talked to. There was no commonality that we could find, that we could study and sell as a multi-client. I would not be surprised if this happens many times over again. Semantics and syntax are very problematic. You don't realize that until you actually get in and say, OK, what if we give you this, would you want it? Oftentimes you get the answer yes, maybe, and then you come back and say, would you pay for it? You might get a different answer at that point, for different reasons. So these things are not easy to define; you literally have to go through the exercise.

Another thing we have found is that to understand who the clients may be, you have to go through specific individuals. It's very difficult to give generic titles, you know, that marketers would want this. Actually you have to find out who, and what specifics they want. Does that match with somebody else's, and is it enough of a universe to make a syndicated research activity worth pursuing?

Other than that, the other challenge that we have found is that to improve the intelligence collection you need feedback. In order to get better at it you have to find out what you are doing well and what you are not doing well. It is very problematic. We tend to get that ad hoc, although we try to get as much information from our clients as we can. It is always difficult and you always get partial information, and I would also like to suggest that this holds even for this colloquium. Although the organizers, including myself, have been trying to get feedback, it is always difficult because those who would benefit don't always want to spend the time giving feedback. So there is a dislocation there. You usually end up doing what you think is right, then get some sort of feedback. You kind of go along but you don't optimize.

That is pretty much what I have, except one other lesson that we picked up that is quite interesting. There is a difference between those who pay and those who use. They often do not match. What you get in terms of requests from one is not what the other one wanted. You end up bifurcating your responses. You get into a sort of schizoid situation that is somewhat problematic, but you have to accept it, go with it, and try to satisfy both. That is pretty much what I have.

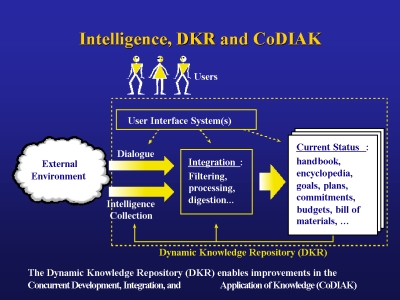

I am hoping that we will have this discussion further on in terms of intelligence collection with respect to the DKR and CoDiak. It is a practice that you cannot define beforehand, and it is like a sport. You get better when you do it.
