Benchmarking to improve management practices

Norman McEachron

- unedited transcript -

One of the things that Doug mentioned in his remarks that I found interesting is the importance of, in effect, learning from the activities and successes of today, and in fact running experiments. Part of the challenge that he mentioned about laboratory-scale experiments is the low level of complexity in which the events are taking place, and the difficulty, in effect, of replicating some of the information generated in that social setting for a larger environment. There is another approach that I have run a lot at SRI over the last twenty years, which tries to get information directly from those highly complex environments by, in effect, treating each organization as a series of natural experiments in performance, and trying to identify the good performers from a particular point of view.

Say that I am working with a technology company that wants to identify how to do product development more quickly, which was a classic series of benchmarking activities in the late '80s and early '90s. So they take a look at companies that seem to be bringing products to market pretty well, and they ask us to do a survey across those companies to say what practices those companies are undertaking that really allow them to bring things to market more quickly. So what we end up doing is looking at the organizations and discerning what the critical factors are in speed to market, if that is the subject, then looking at the combinations of practices that seem to characterize those companies that are actually more effective in bringing products to market quickly. That is an example of benchmarking: identifying good practices by taking, in effect, the working organization as a laboratory, through comparison rather than through a controlled experiment. That is why I called it natural experiments. I suppose my spiritual progenitor on this one is Donald Campbell.

What we are looking at, then, is identifying best practices as a tool for systematic improvement. If we are looking at how to bring new products to market, that might be sort of a level one. We could equally well look, and we have, at things like how to manage and improve the quality of the product that you are delivering, which is in effect looking at how organizations manage an improvement community. So there are various ways in which you can do this. The focus of the best practice can vary from level to level, so this same activity of benchmarking can apply as well to the improvement community as it can to a fundamental practice of delivering products or managing technology.

Today, because this is perhaps the most recent large-scale benchmarking study, I am going to focus on a particular area of practice, which is the variety of things that companies have found to effectively manage their technology, including the knowledge base for that technology, the knowledge repository.

Benchmarking is an interesting discipline. At one level it is a systematic learning process in which the learning is shared across the participating organizations, not just with the sponsoring organizations. It's fundamental that everybody has to win. A second part of benchmarking that is absolutely critical is that you have to go into the organization and observe in a natural setting, and collect and observe as a third party. You don't just take their word for it. Sometimes the companies themselves don't realize what is an astonishingly interesting and effective, sometimes simple, good practice, because they aren't outside their organization looking in. It is particularly appropriate, when you want to look at a target area, that you understand what the organization that's sponsoring or cosponsoring the study is struggling with. Once you understand that, you understand what is new and different and could improve their performance. In that sense there is a lot of learning that can take place.

There is another part of the process that I want to bring to our attention that is not explicitly on the slide, but it appears in that second bullet. One of the things that is powerful in getting change is to convince people that another course of action is possible.

One of the ways you can do that in a business setting is to look at a competitor, or a company in another industry that is admired by your client, look for the practices that the admired company is using, and bring them back. The reality of those practices, combined with the superior performance of the other organization, can overcome the political opposition that says, "We have never done it that way around here, we couldn't possibly consider doing it that way, you are crazy." Part of the challenge for any consultant is to become credible. My fondest quote from the Bible is the one that says a prophet is honored everywhere except in his own house. Those of you who have tried to achieve change from within the organization know exactly how valid that is. One hundred thousand percent. So to get credence you have to, in effect, line up your evidence, and one of the best ways is to get credible evidence from outside the organization.

This is our approach to taking this research and learning process on the one side, and the political convincing and communication process on the other side, and making it a dynamic, living thing that generates useful results. We have it lined up as a five-step process and it all looks nice and digested, but believe me, doing it in the real world is anything but linear.

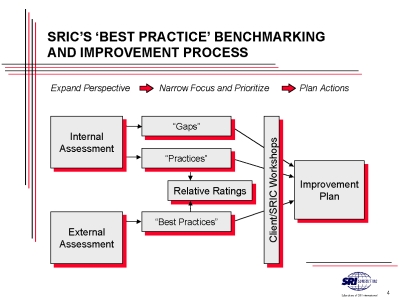

There is a lot of interaction that takes place, and a lot of dispute. In some ways, if you are engaged in the benchmarking process with hot, passionate involvement by various parts of the organization in understanding what is going to be done, you have a pretty good idea that there are some stakeholders out there who are really going to pay attention to the results. The thing that you don't want is a study that doesn't have any passion in it at all. Somewhat akin to our previous speaker.

So, assessing the client situation: we have these dry words called diagnostic interviews and gaps. That is where you step into the organization with some background, the kinds of things that come about from having done this a lot. So you have both the perspective of past good performance and some additional points of view and critical questions. You begin to identify areas of potential weakness and gaps in the organization and start generating some controversy and involvement. Then you get a sense of what the most critical tasks are. At that stage you are trying to elicit the doubts, questions, and challenges that you know you will face at the end, but you identify them up front so that you can go out there and collect the evidence to address them. You don't want to be blindsided at the end by a question that didn't come up at the beginning.

The second thing that you do is develop a compendium of the relevant practices that address those gaps, issues, and challenges. You can start from an experience base, but it is very often the case that the most critical information is the freshest, from the companies that are regarded by opinion leaders as being the best from the standpoint of leadership. So you go collecting information from those technology leaders and feeding back similar kinds of profiles to them, so that everyone is a winner.

The third step is rating the performance against others. This is often the most challenging part from the standpoint of the science of it. In effect, you end up with a very rich set of indicators, evidence, and observations, and making those assessments is not an analytical task with survey questionnaires and standardized responses. It is heavily dependent on judgment. So a lot of the richness and experience base of the benchmarking team goes into this rating of performance. That is one place where we found it is critical that every benchmarking discussion or interview is done by two people, two observers. One of them may take the role of asking the questions, and the other will take the role of recording the answers. There are two independent viewpoints present as to what is being said and what the critical follow-on issues are. So you at least have two points of view. Then when you do that rating you come back and work together as a team to understand the assessment that is involved.

The fourth step is converting the results to an action plan that is appropriate for the organization that paid the money for the study to be done in the first place. There the critical question is transferability: in what circumstances do those best practices that you found in other organizations work, and how relevant or not relevant are they to the client organization? Then there is developing the action plan. Even though I spend very little time on that here, it can be a critical step. So here is the flow chart of how this process works.

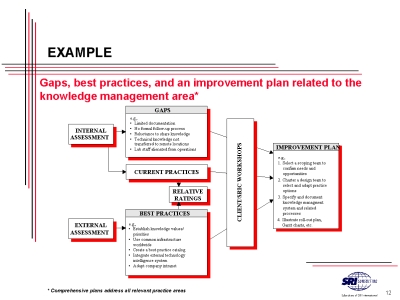

You start with the internal assessment, looking at the gaps and the existing practices that can lead to those gaps. Then you take that information to guide the formulation of your external assessment, looking for the appropriate practices that have achieved superior performance by some concrete measurement. Sometimes you can actually form metrics that go beyond just the pure business success of the organization. Other times it is just a judgment call. Then you bring those relative ratings together to compare the company with the other organizations in the survey.

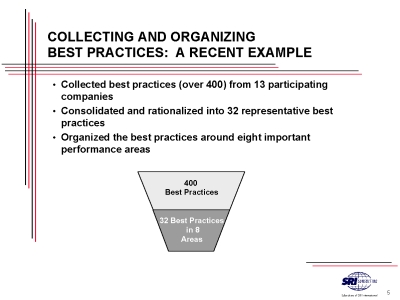

This is an example of good practices. In effect, we should probably have said that we collected the best from each of the companies: over four hundred practices from thirteen different participating companies, most of which are household names in the U.S., in pharmaceuticals, electronics, petrochemicals, and oil exploration and production. The sponsoring organization was an oil company, but had the wisdom to look outside the oil industry, because much of what they were interested in was done better in other industries. Then we organized the performance around eight critical areas.

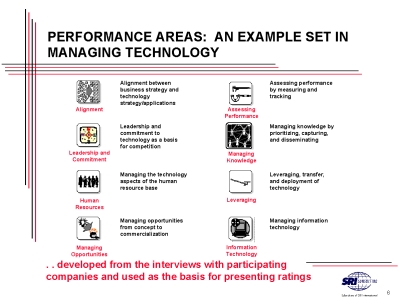

These were the areas; each of these had on average about four excellent practices associated with it. You begin to see here some of the themes, I think, Doug, that you have been talking about: organization; alignment and direction; leadership; managing the human resource base; leveraging and employing technology information; managing, capturing, and deploying business knowledge as well; and assessing and tracking performance. These areas were basically developed as a construct for saying: what do these four hundred practices really boil down to? Which are the best of the best, and how do they fit together into a structure? This is our current representation at SRI of the eight critical areas for managing technology effectively. It is just an illustration; you can do a benchmark on any number of different things.

One thing I might comment on: in every benchmark that we have done, there are certain common elements that appear. Leadership is one, alignment is another. Alignment means the correlation between the activities done on a business level, on a technical level, and on a marketing level. Is the organization all pointing in the same direction? Or is R&D off doing stuff over here while the company management thinks it's over there? I remember a classic misalignment case at a company that we all know, headquartered in St. Louis, where they had a group doing research on a new process for manufacturing polyethylene. It was a very capital-intensive process, which required at that time, the early '80s, about 800 million dollars in capital investment to carry it into operation. It turned out that five years before the completion of their research, the board of directors of the company had decided that they weren't going to make any more capital investments in that industry, but nobody had communicated that to the dozens of researchers who were diligently pursuing this research activity. It became a total loss, and a devastation to the careers of several of those leading professionals, because the parent company sold off that unit without being able to gain much from that technology. From an evaluation viewpoint, alignment is one of those issues.

Another one that has become much more prominent in the last five years, which is consistent with what we are talking about here, is the knowledge management arena. It's probably the fastest-growing cross-industry concern. What do we have as intellectual capital, not just property in the sense of documented and legally controlled, but also intellectual capital in people's heads, and how do we identify it and bring it to bear where we need it? As one book title I just love says, "If only we knew (conscious, deployable) what we know (unconscious, not deployed, somewhere)." So that issue is very relevant in the benchmarking arena as well, because in effect internal benchmarking helps you know what you know.

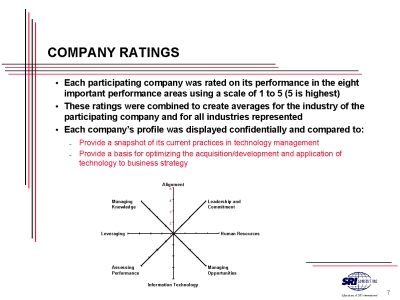

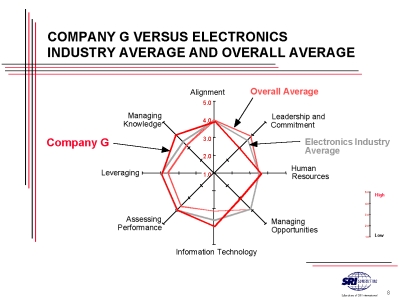

So when we do these company ratings we are, as I mentioned before, using a team judgment process, but we find it very helpful, and sometimes highly controversial (controversy is good in this business because it involves people), to put it together in a graphical form. So we identify the key elements, which are shown here as a radar diagram, then we look at how companies rate compared to their industry.

Here is company G in the electronics industry vs. the industry average. The company itself, interestingly, was good in managing knowledge compared to the industry average, but was having some struggles with respect to leadership and commitment and managing opportunities. In a couple of cases it had fumbled the ball on some new technology. So the process that needed to change, interestingly enough, wasn't located primarily in the technical arena (they knew about those technologies) but in the management process that made decisions about how to use that technical knowledge. Now here is a comparison that shows how best practices help even when you're dealing with a company that is pretty good. Company G relative to the electronics industry wasn't too bad.

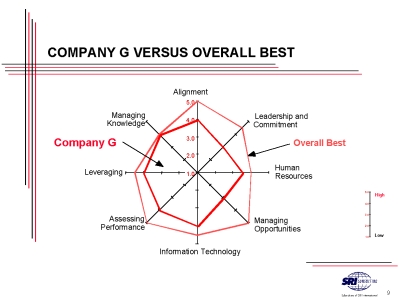

When you start comparing it with the best of the best, in other words when you take the outer bound of all the practices and ratings on all of the scales, you will see there are some real opportunities for improvement in nearly all of the areas. The one where that wasn't true is where the company set the standard, which happens to be in managing knowledge internally with its customers and suppliers. So one of the things that happens in benchmarking is that everybody can win. The best company in the sample always finds that it can still learn things from somebody else, because it is never best in everything in every area at the same time.
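The comparison described here can be sketched in a few lines of Python. This is only an illustration, not the scoring method used in the study: the 1 to 5 scale, the area names, "Company H", and every number below are hypothetical, chosen to mirror the pattern described in the talk (company G strong in managing knowledge, weaker in leadership and managing opportunities).

```python
# Hypothetical team-judgment ratings on an assumed 1-5 scale.
# None of these numbers come from the study itself.
ratings = {
    "Company F": {"leadership": 4.5, "alignment": 4.0,
                  "managing knowledge": 4.0, "managing opportunities": 4.5},
    "Company G": {"leadership": 2.5, "alignment": 3.5,
                  "managing knowledge": 4.5, "managing opportunities": 2.5},
    "Company H": {"leadership": 3.5, "alignment": 3.0,
                  "managing knowledge": 3.0, "managing opportunities": 3.5},
}


def industry_average(ratings):
    """Mean rating per area across all companies in the sample."""
    areas = next(iter(ratings.values())).keys()
    return {a: sum(r[a] for r in ratings.values()) / len(ratings) for a in areas}


def best_of_best(ratings):
    """Outer bound of the radar chart: the highest rating seen in each area."""
    areas = next(iter(ratings.values())).keys()
    return {a: max(r[a] for r in ratings.values()) for a in areas}


def gaps(company, reference):
    """Per-area shortfall of a company against a reference profile."""
    return {a: reference[a] - company[a] for a in company}


if __name__ == "__main__":
    g = ratings["Company G"]
    print("vs. industry average:", gaps(g, industry_average(ratings)))
    print("vs. best of the best:", gaps(g, best_of_best(ratings)))
```

A gap of zero against the outer bound marks an area where the company itself sets the standard. Because the outer bound takes the maximum area by area, even the strongest company in the sample will usually show a positive gap somewhere.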

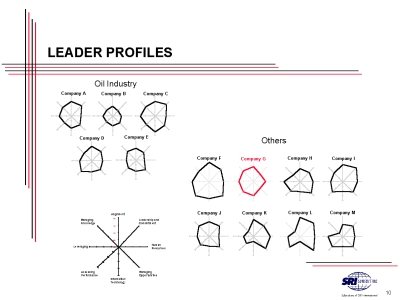

So here are examples that show how that chart is a useful communication tool. You see company G there, and you also see the best company in the sample right next to it, which was company F, which happened to also be in the electronics industry and a household name. One of the interesting questions for company F became: are we actually trying to be too good in too many areas, instead of being focused on the right areas to be good in? So it isn't always the case that you want to be best in every area.

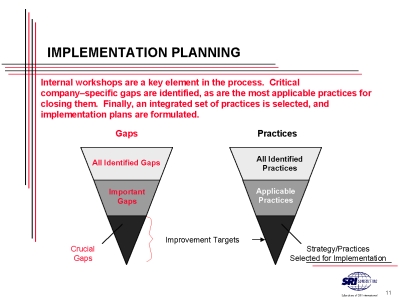

So, just to make some comments on the nature of implementation planning: these gaps that we identify at the front end of the process, on the left, are the critical gaps that we aim at when we try to identify the best practices. Then, when we come up with those practices, we filter them down to those that are applicable to the company, transferable, and adaptable. Then we can identify the improvement targets.
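As a rough sketch of that filtering step, assuming each candidate practice has been tagged with the gap it addresses and a yes/no team judgment on transferability: the `Practice` record, the pairing of practices with gaps, and the transferability flags below are all hypothetical and only illustrate the shape of the process.

```python
from dataclasses import dataclass


@dataclass
class Practice:
    name: str
    addresses_gap: str   # gap the practice is aimed at (assumed pairing)
    transferable: bool   # team judgment: would it work for this client?


def improvement_targets(client_gaps, practices):
    """Keep only transferable practices that address one of the client's gaps."""
    targets = {gap: [] for gap in client_gaps}
    for p in practices:
        if p.transferable and p.addresses_gap in targets:
            targets[p.addresses_gap].append(p.name)
    return targets


client_gaps = ["Limited documentation", "Reluctance to share knowledge"]
practices = [
    Practice("Create a best practices catalogue", "Limited documentation", True),
    Practice("Use common infrastructure worldwide", "Reluctance to share knowledge", True),
    Practice("Hypothetical practice judged not transferable",
             "Reluctance to share knowledge", False),
]

if __name__ == "__main__":
    for gap, names in improvement_targets(client_gaps, practices).items():
        print(gap, "->", names)
```

In practice the transferability call is a judgment made by the benchmarking team, not a flag that can be computed; the code only records the outcome of that judgment.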

This shows an example of an improvement plan related to the knowledge management arena, looking at some of the gaps that are there and some of the best practices that we identified as addressing those gaps. Naturally, each bullet has richer detail beneath it; we are keeping it at a summary level.

Let me read through what the example gaps were within the original company. Limited documentation and no formal follow-up process: this is a typical problem. Companies neglect to document what they learned from the last project, so they continually make the same mistake on the next project. Reluctance to share knowledge. Does this sound familiar? I've got my knowledge; I should share it with Jack? Jack might get a better bonus, so why don't I keep the knowledge here and let Jack stew in his own juice. Technical knowledge not transferred to remote locations: this was a particular problem because this was a global company with widely distributed technical exploration and production activities. And laboratory staff alienated from operations. We chose that word carefully, because they were really alienated from operations.

Best practices: establishing knowledge values and priorities. Using common infrastructure worldwide; it sounds like a familiar theme we have been talking about here, having the ability to share knowledge on a common basis. Create a best practices catalogue, both internally and with respect to external observation. Integrate an external technology intelligence system: what's going on out there, and what will it tell us about what we need to do, and maybe what opportunities we could take by acquisition or purchase instead of having to do our own technical development. Lastly, adopt a company intranet as the platform for distributing this knowledge. So those were the key best practices as of 1998 in this arena of knowledge management. Now, we have come some distance in the last two years, but in many companies these are still critical areas, maybe with some slight technical advancement, but still critical areas.

So the improvement plan requires selecting a team, chartering a redesign team, specifying a knowledge management system, and developing a roll-out plan: the standard kinds of management activities for implementation. Now, given the best practices, you are in a more solid position to understand what it will do for you, and even to have a series of contacts with those companies that have already done it. You can say, "What are some of the lessons learned in implementation? Since you are not a competitor, but you have also done a knowledge management system, what can help us out, and what should we avoid?" So one of the things we have seen from benchmarking is that a community of interest in improvement is formed, sharing practices, experiences, and implementation over time, which fits with one of the things Doug mentioned in his introduction as well. Thank you.
