The Archimedes Project Neil Scott. 1.* - unedited transcript -

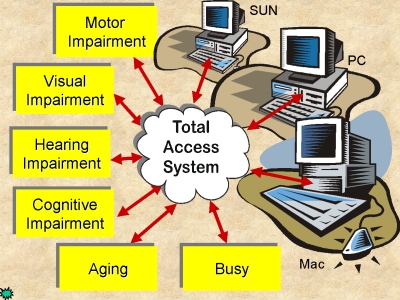

Okay. I'll talk fast. My main work is ensuring everyone has access to information. We're creating an infrastructure where information is being made available, but if you can't use the tools that are provided, like the keyboard and mouse or your eyes, you're blocked out. And this is actually very serious in many cases where societies are adopting things like information kiosks where you get your money or pay your bills or renew your driver's license. Someone who can't use that device is disadvantaged. We started off here at Stanford doing this and we've been getting comments from all around the world saying, "We want to do the same thing." And so to my original focus, which is looking at individual needs, abilities, and preferences, I've had to add culture, because different countries have different ways of doing things, and because we are becoming global we have to take note of that. For many years we made access by modifying the system that people would use.

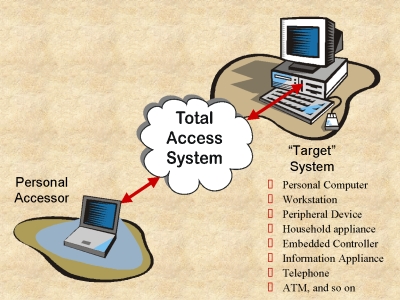

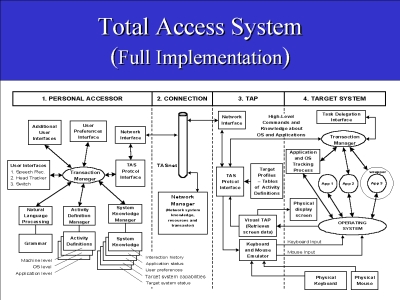

We'd get inside of the computer and change it. That has become totally impractical. You know, the computers change faster than we can change them. And also, if you change a computer you then have to look after it for the rest of your natural life. It's a problem. So the system that I developed, which we call the Total Access System, says let's split the problem into two parts. Leave the target system alone and give the person something that allows them to interact with any of the things in the environment. The nearest analogy I've come up with is my spectacles. If I take them off, you will all look really funny. You're sort of blurry. I put them back on and by magic you're there. These are my business. I've been wearing glasses since I was three years old. There are other people here with glasses on. If we swapped glasses it wouldn't work. Some people say the infrastructure should look after it. It's like saying every window in the world should automatically curve to adapt to my needs. It's not going to happen. So we have to allow people to move around and use the things in the environment by bringing with them what makes sense for them to bring. We call that device a personal accessor. The piece that goes between the personal accessor and the target is a little box where we put the translation material to make this thing operate the keyboard and mouse and read the screen of the target system and turn it into a standard thing here. Now a lot of the computer companies for a long time... because looking in that hole, every computer looks identical. A Sun, an SGI, a PC, a Mac, whatever: they all look the same, because we made them all understand the same set of standardized commands. The idea is very simple. It's one of those things that people see and say, "Oh, that's so simple, I could have done that!" Yeah, well.

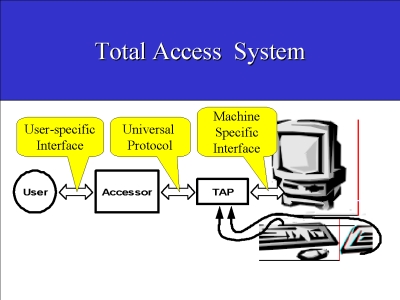

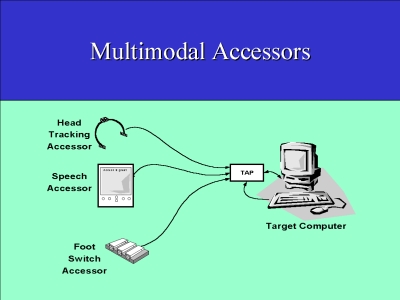

It's a little bit like those things, you know: it's obvious once you've done it. But what we are working on is that the link between the user and the accessor device, whatever that might be, is very specific to the user. It's human-centric design. It's: what does this person need? Between the accessor and the TAP it is universal, so that any accessor can work with any TAP. Between the TAP and the target it is machine specific. So the key thing is that we've taken away the knowledge of the machine from the user. They don't need to know. And so...
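The three-layer split described above (user-specific accessor, universal link, machine-specific TAP) can be sketched roughly as follows. This is only an illustration under stated assumptions: the `Command` format, the class names, and the output strings are all invented here and are not the project's actual protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    """Standardized, machine-independent command (hypothetical format)."""
    action: str  # e.g. "key" or "click"
    arg: str     # e.g. a key name or a button name


class SpeechAccessor:
    """User-specific side: maps one person's phrases to standard commands."""

    def __init__(self, vocabulary):
        # vocabulary: dict of lowercase phrase -> Command, tuned to the user
        self.vocabulary = vocabulary

    def interpret(self, phrase):
        return self.vocabulary[phrase.lower()]


class PcTap:
    """Machine-specific side for a PC (the output string is invented)."""

    def emit(self, cmd):
        return f"pc:{cmd.action}:{cmd.arg}"


class MacTap:
    """Machine-specific side for a Macintosh (the output string is invented)."""

    def emit(self, cmd):
        return f"mac:{cmd.action}:{cmd.arg}"


def drive(accessor, tap, phrase):
    # The accessor never sees which machine is on the other side of the TAP.
    return tap.emit(accessor.interpret(phrase))
```

In this sketch the same accessor object works unchanged with either TAP, which is the point of keeping the middle layer universal: only the TAP knows anything about the target machine.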

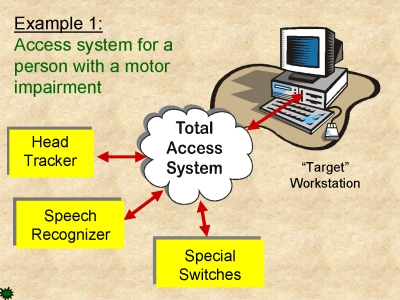

Some quick examples of this in practice. The person I'm going to show you in the next photograph broke his neck in a diving accident when he was seventeen years old, and his system has a speech system running in this notebook.

He has his Mac TAP here connected to a Macintosh just hidden off the side of the screen, and he has a head tracker. And I defy anyone to use a Macintosh more accurately or more quickly than J.B. He worked with me for about five years and he's just finishing an MBA at Berkeley now. I don't know if I'll ever get him back, but basically the key thing in working with someone like J.B. was that no one was ever consciously aware, apart from you people. But the people who worked on the project, you never thought of J.B. as being any different from anyone else. The only thing in the morning was we'd slip his headset on. We got the robot to do that. That was the intention, but I never got enough faith in the robot to let it; he'd end up wearing the headset on his nose. But the head tracker allowed him to do all mouse functions and his voice allowed him to do everything else: clicking the button, whatever. The way we're working is to allow people to mix and match things like head tracking, speech, foot switches, and mouth switches, whatever. But the target system remains totally unchanged. We then had the situation where, you know...

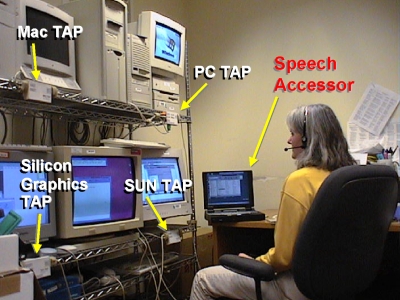

Sun may think that all the computers in the world are Suns, and Microsoft thinks they're all PCs, and so on, but really there are a few others. And we found that with Judy, who came to us.

She had reached a pretty high level as a programmer here in Silicon Valley in some of the big companies, and she'd reached the point where she was the person that you'd get when you call up with a problem and she'd help you walk through it. But her elbows gave out with tendonitis. So before I met her, she'd been out of work for eighteen months. We had her back up to speed in one week, and her job was to work out how we say the same thing to the speech accessor and get the same result on all of these different computers. So at this time all she had to say was "talk to the PC" and then just say whatever she was doing. "Talk to the Sun," do exactly the same thing, and exactly the same thing would happen. Eye tracking.
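The "talk to the PC" / "talk to the Sun" behaviour in Judy's example can be pictured as a small router that remembers the last selected target and forwards every other phrase to it. The sketch below is an assumption about how such routing might look; the `TargetRouter` class and its fields are hypothetical names, not the project's code.

```python
class TargetRouter:
    """Forwards spoken phrases to whichever target was selected last."""

    PREFIX = "talk to the "

    def __init__(self, target_names):
        # One list of received phrases per target, keyed by lowercase name.
        self.received = {name.lower(): [] for name in target_names}
        self.current = None

    def hear(self, phrase):
        text = phrase.strip().lower()
        if text.startswith(self.PREFIX):
            # A selection phrase switches targets and is not forwarded.
            name = text[len(self.PREFIX):]
            if name not in self.received:
                raise KeyError(f"unknown target: {name}")
            self.current = name
            return
        if self.current is None:
            raise RuntimeError("no target selected yet")
        self.received[self.current].append(text)
```

Saying the same phrase after selecting a different target delivers an identical command to the new machine, so the speaker's vocabulary does not change from computer to computer.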

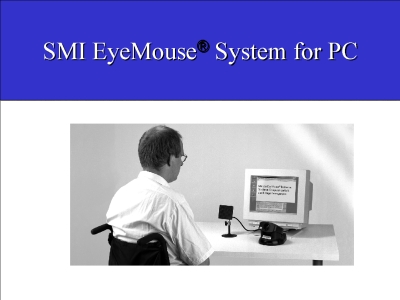

The normal sort of scheme is that there is this infrared light source that shines on your eye, you get a different...

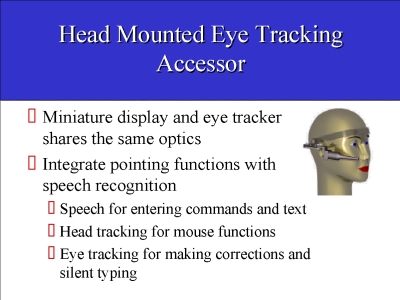

They actually jerk around, and our brain is integrating all these images, and it's very, very difficult to tie together, but we've got, we think, one of the best eye tracking systems now. We're working with a German company to mount the whole device in a little thing that just sits down on your face, and it's head tracking, eye tracking, and voice. Head tracking does the mousing, eye tracking does the selection for menus and things you can do very well, and the voice does all of the commands. Humble switch.

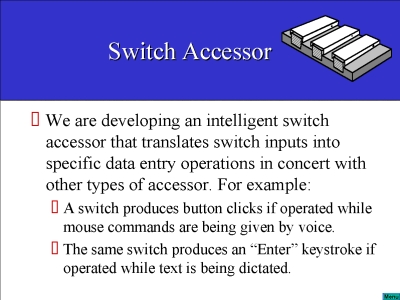

You think of a switch as just a switch. Put a microprocessor in there and teach it the codes and it becomes a very helpful tool, because now the accessor can tell the switch, "Oh, until I tell you otherwise you're an indicate for a Sun," or "until I tell you otherwise you're the left mouse button on a PC," or whatever it might be, or "I'm going to give a very complicated command, and if it screws up, here is what you have to do to undo it." And so you can have a logical forward, a logical undo, whatever. Hand Held Accessors.
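As a rough picture of the programmable switch described above, the sketch below models a switch that holds whatever role the accessor last assigned to it, together with an optional undo action. The class, method names, and role strings are all hypothetical; the talk does not describe the actual codes the switch is taught.

```python
class SmartSwitch:
    """A switch whose meaning is assigned at run time by the accessor."""

    def __init__(self):
        self.role = None       # what a normal press means right now
        self.undo_role = None  # what to send to reverse the last command

    def assign(self, role, undo=None):
        # "Until I tell you otherwise, you are <role>."
        self.role = role
        self.undo_role = undo

    def press(self):
        if self.role is None:
            raise RuntimeError("switch has not been assigned a role")
        return self.role

    def press_undo(self):
        if self.undo_role is None:
            raise RuntimeError("no undo action was supplied for this role")
        return self.undo_role
```

For example, after `assign("pc:left_mouse_button")` every press reports that role until the accessor reassigns it, and a later `assign("sun:complex_command", undo="sun:revert")` gives the same physical switch a logical forward and a logical undo.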

I can drive a Sun or a PC or a Macintosh totally from my Palm Pilot. Never touch the keyboard or...

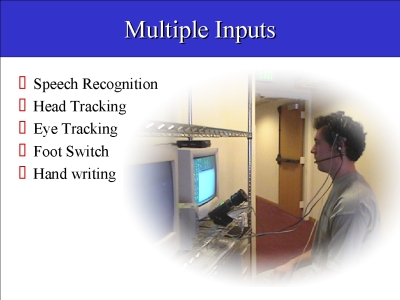

So this is a system where we are working on how to balance things out. Where do you use speech, head tracking, eye tracking, foot switches, handwriting, whatever? Because each does a job well. For a person who is disabled, you're pinning them to taking one particular choice. For the rest of us, we can mix and match all the rest. Ben is not disabled. He's a very, very bright programmer here at Stanford, graduating from the CS department. But he was using all of these different functions together, and we're working out, you know... An eye tracker to select from a menu is like magic. Because when you use a mouse, you move the mouse to it, then you do something that opens it. You then look at what's happened, move the mouse to it. When you use eye tracking you look at it and it flows open, and your eye naturally flows to the next thing, and so the menus just ripple. And it's like magic. It really is. But okay. Haptics, blindness.

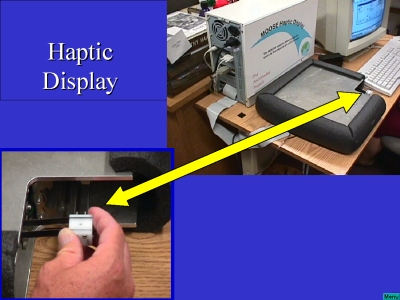

What we did for the blind people was basically take people just as qualified as any of us sitting here and turn them out on the street about five or six years ago, because they couldn't handle all the new software that came in that was totally graphical. This is a prototype of a device called the Moose, all right, the mouse with muscles. And what it is, it's a mouse that's got two thumping great motors underneath the middle here that let you feel where it is. It's evolved into a practical mouse that has little motors under here.

It can't compete with a Moose, in the sense that with a Moose we can take you where we want you to go. With this one it's more a psychological sensation of you going there, but we're looking at how we tie this into the system. Including this drawing. This is something that Adam and I worked on for, what, six months? Evolving it for a grant proposal we were preparing.

And I put it in because this part here, the accessor, looks rather like the drawing Doug has shown several times, where you have the user interface coming out. And he had a terminal file and the grammar and so on. Where we're looking towards is having a lot more information about the real world that we're interacting with. But it's my version of what needs to be done and what's out there, and as I'm interacting it knows a hell of a lot about me; it learns.

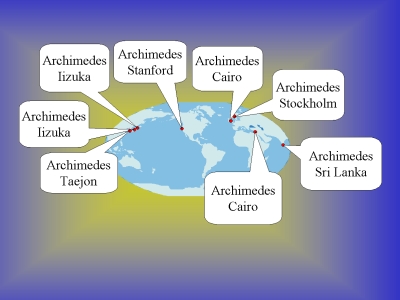

But we still have full control over the systems. Rushing on to the next part. That's the piece that goes in the hardware end of it. The other part that I've been working on for quite a few years now is, as I said, we started getting inquiries from other countries saying, "Oh, we want an Archimedes Project too. We like what you're doing." And currently we're talking with groups all around the world about establishing a similar project.

And the goal is to get them up to speed with what we already know, help them make it indigenous, so that in Sri Lanka, for example, we may supply some little module but they supply the rest of it to make it an indigenous industry, and then get them involved in helping us with the research that needs to be done.

And currently I have quite a few Japanese engineers; Sweden, Britain, we have people coming and working on the project here and going home, and so we've sort of got the sharing going on already, but we want to make it more real time, distributed around the world.

Looking at all these dots, there are countries I haven't been to yet. I know for a fact that New Zealand and Australia are going to sign up as soon as I go and tell them. But there are a lot of other places. It looks like the basis of developing a NIC of people around the world interested in disability and aging and the technology that goes with it.

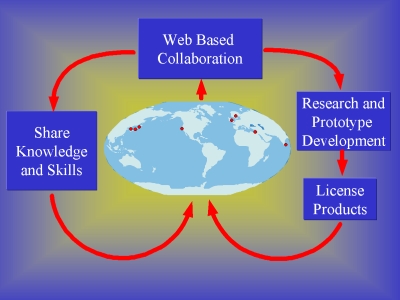

The model that I evolved here is: share existing knowledge; get funding, which seems to come up all the time; perform academic research by tying in the universities; perform applied technology research by having the places look beyond, at how do we take the research and make it practical. There is no point in doing it if you don't disseminate it and deliver it, and then share the new working knowledge, and this is where the feedback comes in.

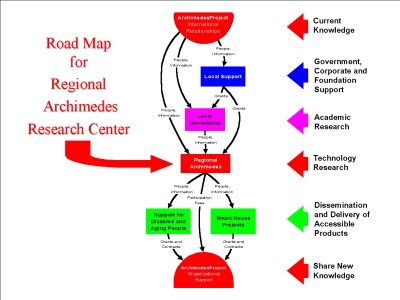

In this diagram I have here, which I use as a road map when I talk to people about this, I've deliberately duel Archimedes project. In fact we've finished signing the papers today for starting an Archimedes International, which will become the umbrella organization to tie this all together. I deliberately did this as two half circles so that it folds back on itself, because of the feedback, but...

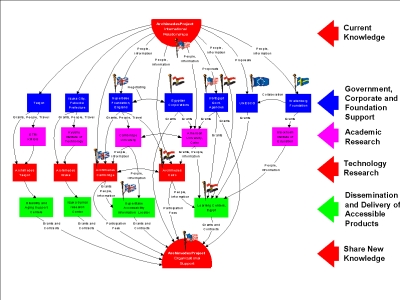

If you run down there, you know, it's all the things I just said, and just to give you an idea where we're at, I keep joking with people that if I get any more people I've got to get a bigger computer.

But this is to give an idea of the network of the different groups that are already involved in just what we've been doing so far. Archimedes Cairo is actually starting. We've got one in Isuka City in Japan that is starting. We've got several others that are getting the money together to start up, and so on. So I see this as a real, tangible example of where people can get involved in doing something that has a very good outcome. It's not competitive in the sense of, "Oh, I can't work with you because my boss won't let me share any of the stuff I know." So I'm going to go straight to the last page here, which is next Thursday.

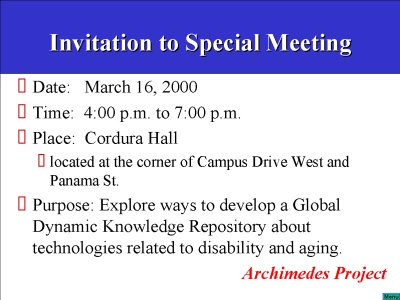

I thought since you've all been coming at 4:00 on Thursdays, next Thursday anyone who is interested in the possibility of an ongoing involvement and thinking things through, you're invited to a session at Cordura Hall, which is at the other end of Panama St., back by Campus towards the medical center. The purpose is to develop a way to develop a global DKR that looks at technologies related to disability and aging. And the thing that I see is that we're all going to need it. And I don't know whether you want to use it as a forum to get the people who may be interested in the technical side of it. You know, we've got a lot of room there; we can share it, spread out if people are to. So anyway, 4:00, next Thursday, don't break the pattern, just come here subconsciously, and other side of the street.
